[Hilarious alternate title: Dealing with SEO: How to go from SE-No to SE-Oh Yeh.]

I wrote this article back in 2014 for our agency’s blog. It was never published – I suspect our sales & comms people didn’t like it as it conflicted with some of our service offerings.

While it reads a bit dated, I think the core tenets are still more or less correct, and I just thought the title was very funny, so here we are.

SEO? More like SE-NO!

Until social networking came along, search engine optimisation (SEO) was the undisputed king of web buzzwords. If you weren’t doing SEO, then you were crazy and your website – and by extension, your business – was going nowhere.

SEO now has to vie for mindshare against a social media strategy, but it has a long legacy and thus is still heavily entrenched in the minds of anyone who is trying to do anything in the online space.

If you’re running a website for your business, and you’re not a technical person familiar with the intricacies of SEO, you might have been concerned about this – how do you make your website stand out? How do you set things up so that when someone types in the name of your business or your industry, you show up on the first page?

In short – do you need SEO?

Well, vast hordes of SEO specialists, agencies, companies and consultants have sprung up over the preceding years to help answer these questions. Sounds promising, right? In an increasingly knowledge-based economy, it’s obviously helpful to have a bunch of people who have devoted themselves to becoming experts on a topic, so you can leverage their abilities. Great!

Unfortunately, things aren’t great in the world of SEO. Things are messy. Let’s have a look at why.

What is SEO?

First up – what the heck is SEO, anyway? “Search engine optimisation” sounds pretty clear-cut – everyone needs to be on search engines! But what actually is it? When someone “does SEO”, what exactly are they doing?

The short answer is: it could be anything. “SEO” is not the sort of hard, technical term that is favoured by computer nerds like us. There’s no specification, there’s no regulations, there’s no protocols – there’s not even an FM to R.

In a nutshell, SEO means making changes to a website to improve how search engines react to it. It can be as simple as making sure you have a title on your page – for example, if your business is a coffee shop, you might want to make sure you have the words “coffee shop” somewhere in the title. It can be complicated, too – like running analyses on the text content on your site to measure keyword density.
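To make "keyword density" a bit more concrete, here is a minimal sketch in Python. It assumes one common definition: the number of words belonging to occurrences of the phrase, divided by the total number of words on the page. The function name `keyword_density` and the sample text are made up for illustration, and search engines do not publish how (or whether) they use a figure like this.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Fraction of the words in `text` that belong to occurrences of `keyword`.

    Assumed definition (one of several in use):
        (phrase occurrences * words in phrase) / total words
    """
    # Lowercase and split into simple word tokens (letters, digits, apostrophes).
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0

    n = len(phrase)
    # Slide a window of the phrase's length across the text and count exact matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return hits * n / len(words)


# Hypothetical page copy for a coffee shop.
sample = "Our coffee shop serves coffee. Best coffee shop in town."
print(f"{keyword_density(sample, 'coffee shop'):.0%}")
```

A number like this is easy to compute, which is part of why it turns up in so many SEO reports. On its own it tells you nothing about how a search engine will actually rank the page.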

Changes can also be external. One of the biggest things that impacts a site’s rankings in search results is how many other people on the Internet are linking to you. So one SEO strategy is to run around the Internet and make a bunch of links back to your site. (We’ll talk about this a bit more later.)

Other technical things might influence SEO as well. Google recently announced that whether or not a site used HTTPS (the secure padlock dealie that means your website is secure for credit card transactions) would start having some impact on rankings.

As we can see here, there’s a bunch of different things that can affect your SEO – and I’ve only listed a handful of them. There are more – and they all are interrelated.

As if that wasn’t complicated enough, there’s something else that affects where you end up in search results – the person who is searching. Where you are will change things – if I’m searching for coffee shops, I’m more likely to get results that are geographically closer to me. If I’ve done a lot of searches for certain terms, I’m more likely to see results based on those terms.

If you have your own website and regularly visit it, it is possible that this will affect the rankings as you see them. If you search for yourself, you might see your site ranked higher than it would be for someone doing the exact same search in the next street – or the next town, state, or country.

What’s the practical upshot?

In short: SEO is complicated. There are lots of variables, and they are hard to control.

That’s not even the really bad part: the only people who know exactly how the search ranking system works are the search engines themselves. No matter what you do, the outcome is still 100% determined by whatever is going on with the search engines on any particular day.

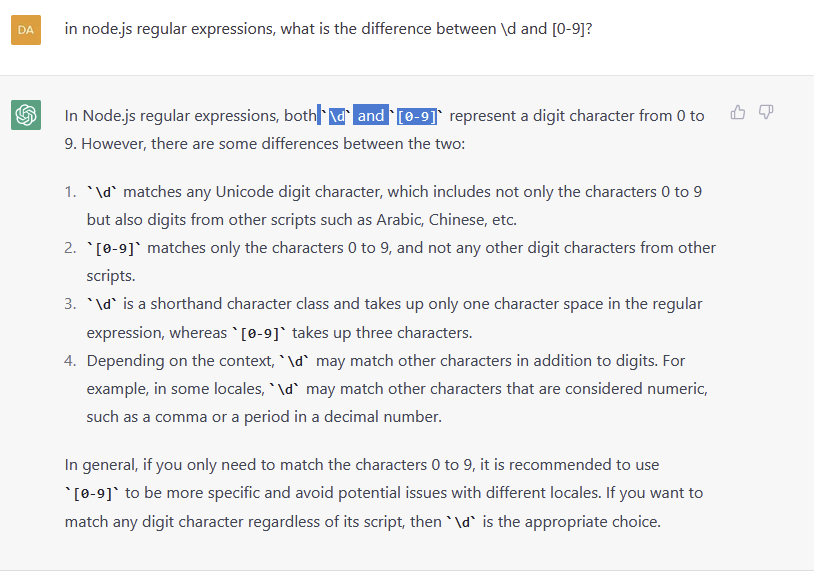

No matter what you’re told, anything anyone knows about how to “do SEO” comes from one of two sources: information made publicly available by search engines, or reverse engineering of search engine behaviour by experimentation.

You might invest large amounts of time and effort (and money) in trying to execute a particular SEO strategy, only to have Google come along the next day and announce they’ve changed everything, and your investment is largely wasted.

SEO is a moving target. Things are constantly in flux, in no small part due to the huge number of people attempting to game the system by any means possible – in a world where a top ranking in a competitive search result can mean a huge increase in sales in a very short time, getting an edge is a big deal. And many slightly more nefarious techniques – usually dubbed “black hat SEO” – have emerged, which in many cases can do massive damage to your rankings.

As if all that wasn’t traumatic enough… your ranking is something that evolves over time. A new website won’t appear in search results immediately; it might take a few days to show up, and in most circumstances it will be low in the rankings. If you’re in a competitive space, it might take you months to even register on the first few pages of results.

This means it is very, very hard to do any sort of significant or reliable experiments with SEO in a short timeframe. You can’t change a few words and then instantly check how they affect your rankings. You have to wait – a long time – to see if the change has any effect. During that time, any number of other changes will have occurred, making it hard to confirm whether your experiment worked.

Doing SEO scientifically is hard. Measuring cause and effect is hard enough in small experiments when there are few variables and they can be tightly controlled. In SEO there are many variables, constantly in flux, known only to the clever engineers that write and evolve the ranking algorithms – the secret sauce that drives how every search engine works.

I said what’s the practical upshot!

Oh, right. Well, the practical upshot is that the world of SEO providers is full of people over-promising and under-delivering.

This is the big risk of paying for SEO services. Because it’s such a vague, hand-waving term that encompasses so many different areas, there are, sadly, a number of operators in the space that use it as an opportunity to provide services that are not quantified or qualified in any meaningful way.

Because of the complexity of the systems involved, it is practically impossible to deliver a promise of results in the SEO world. You might get promised a first page search result, but it is extremely difficult to deliver this, especially in competitive spaces – if you’re trying to get your coffee shop on the first page of Google results for the term ‘coffee shop’, you’ve got a long road ahead of you.

Worse, there are black hat operators that will do things that look like a great idea in the short term, but may end up having huge negative ramifications. “Negative SEO” is one of the more recent examples.

As a result, there are plenty of witch doctors offering SEO snake oil. Promises of high rankings and lack of delivery abound – followed by “oh, we need more money” or “you need to sign up for six months to see results”.

One only needs to look at the SEO sub-forum on Whirlpool – one of the most popular communities in Australia for those seeking technical advice – to see what a train wreck the current SEO market is. At the time of writing there’s a 96-page thread at the top from unsatisfied customers of one particular agency. There are stacks of warnings about other agencies. Scroll through and have a look.

Customers of many SEO agencies are not happy, and it’s because they’re paying for something they don’t really understand without getting crystal clear deliverables.

The situation is so bad that the second sentence on Google’s own “Do you need an SEO?” page states:

Deciding to hire an SEO is a big decision that can potentially improve your site and save time, but you can also risk damage to your site and reputation.

Some other interesting terms used on that page: “unethical SEOs”, “overly aggressive marketing efforts”, “common scam”, “illicit practice”… indeed, the bulk of the document explains all the terrible things you need to watch out for when engaging an SEO.

(I should stress that this is not a general statement that encompasses all those who perform SEO. There are many smart and dedicated people out there that live on the cutting edge of search engine technology, doing only white hat work, delivering great things for their clients. The hard part is finding them in the sea of noise.)

Cool story. What does this mean for me?

Back to the original question – do you need SEO?

There’s no right answer. It’s a big question that encompasses a wide range of stuff. Without looking at your specific situation it’s hard to tell how much effort you should put into SEO at any given point in time.

Remember: there’s no clear-cut magic SEO bullet that will do exactly what you want. But one thing is for sure – someone will happily take your money.

If you decide to engage someone to help optimise your website for search, here’s a quick list of things to pay attention to:

- Carefully read Google’s “Do you need an SEO?” document, paying particular attention to the dot points at the bottom.

- Establish clear deliverables that you understand – you need to make sure that you know what you’re paying for, otherwise what you get will be indistinguishable from nothing.

- Tie any payments (especially ones involving large amounts) to performance metrics – but don’t be surprised if they’re not interested in doing this. (What does that tell you?)

- Remember that anything that is not a simple content update that you can do yourself might have other costs – for example, changing page layout or adding new tags might require you to get web developers on board.

- If you’re building a new site from scratch, make sure your developers are factoring in SEO right from the outset. Almost any decent developer will be using a framework or software that takes SEO into consideration, and as long as they – and you – are paying some attention to Google’s SEO Starter Guide (EDIT: 2018 version is here: https://support.google.com/webmasters/answer/7451184?hl=en ) you’ll end up in a much better position.

- Strongly consider search engine marketing (SEM) instead. SEM is the thing where you pay companies like Google money to have your website appear in search results as ads, based on specific terms. The Google programme – AdWords – gives you incredible control over when your ads appear, and you also get excellent data back from any campaigns. With AdWords you can actually effectively measure the results of your work – so you can scientifically manage your campaigns, carefully tracking how every one of your marketing dollars is performing.