Dropbox is one of the very few applications I’ve installed that has completely changed the way I use computer systems.

Under most circumstances, I no longer have to think about the irritating business of sending and receiving files, or stuffing them onto some other system to be retrieved later. I can just save everything onto my local disk – exactly as I’d like to – and know that it will magically pop up at some later point on every other PC that I own. I take photos on my phone knowing they’ll be stuffed onto Dropbox for later retrieval on my PC – indeed, I no longer even think about “copying photos off my phone”, because it just happens.

There are, of course, a few limitations. For example, it’s hard to do this with large volumes of data, simply because the upstream on most broadband plans is woeful. In those cases, reaching for a USB disk or stuffing bytes onto my phone is typically the better alternative.

Of course, the other limitation is the few paltry gigabytes of storage you get on the free plan. If you’re dedicated though, it’s pretty trivial to boost this by quite a bit – referring friends, linking devices, all that sort of stuff. At the time of writing I have 4.2GB available on my Dropbox, without spending a cent.

And now, perhaps inevitably, I find myself in the situation of wondering why the hell all my files aren’t on Dropbox. It’s almost like they had some sort of insidious plan to get me hooked on their awesome system by giving me a taste for free.

Unfortunately I don’t really want to use Dropbox. Not really because I don’t want to pay for it, but because I have never really liked their security model. I want my files to be encrypted/decrypted client side.

I suspect the main reason they don’t want to offer this is because it would remove a lot of the basic functionality that the vast majority of users take advantage of regularly – the ability to access and share files quickly and easily via the web interface in particular. Not to mention the support nightmare that would certainly ensue when those users lose their encryption keys and wonder why all their files are now a bunch of unrecoverable gibberish.

In the post-Snowden world this is possibly an even bigger deal. I don’t really have concerns that faceless government agents are going to be poring through my files – but it’s clearer than ever that you are ultimately responsible for the security of any data you put online.

I’ve tried a few of the alternatives to Dropbox – Wuala and SpiderOak most recently. Their security policies look good and they (appear to) use client side encryption. Wuala is also located in Europe (SpiderOak, as far as I can tell, is a US company), so with Wuala at least I can rest somewhat comfortably knowing it’s not subject to secret NSA orders or whatever.

With the possible exception of Google Drive (which of course is subject to the same woes as Dropbox), I found the other services I’ve tried almost completely unusable compared to the elegance, simplicity, and sheer Just Workiness of Dropbox. I tried – I really did. I wanted to like them. I’m not sure if it’s all that security stuff getting in the way of a good experience, but they feel clunky and awkward to use and painful to set up, and I spent the whole time thinking “why am I doing this?”

I’m a big believer in voting with your wallet. It’s not like there aren’t other options. But Dropbox is just so damn convenient in so many different ways that I can feel myself slowly caving and abandoning any lofty principles just so I can go back to Getting Shit Done.

There are two things that Dropbox could do to get me off the fence immediately.

1) Introduce client side encryption/decryption into the Dropbox client. While it remains closed source I can imagine many would still (rightfully) be hesitant to trust it (how would you know they’re not capturing your encryption keys?), but a nod in that direction would be enough for me.

2) Introduce an option to restrict storage of my files to particular Amazon regions. I am not intricately familiar with how Amazon’s cloudy stuff works, but it seems that this would not be a complicated feature: allow me to opt to have my files stored on S3 within particular geographic regions. I can imagine this would be a big deal for many government services who might want to use Dropbox but are subject to limitations on where their data can be physically stored. For the security nerds, it would be a neat way of getting out of the reach of the NSA (yes, yes, subject to their ability to compromise any site anyway).

What I suspect I’ll end up doing is signing up for a plan, encrypting all my stuff locally with GnuPG, and treating it more like a backup archive than a live working filesystem.
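
For what it’s worth, here is a rough sketch of what that “encrypt locally, then let Dropbox sync the ciphertext” step might look like in Python. It assumes the `gpg` command line tool is installed and on your PATH, and uses passphrase-based (symmetric) encryption so there is no key pair to manage. The function names and the `.gpg` naming scheme are made up for illustration; this is not something Dropbox or GnuPG provides.

```python
import subprocess
from pathlib import Path
from typing import List


def build_gpg_command(source: Path, dest_dir: Path) -> List[str]:
    """Build the gpg invocation that encrypts `source` into `dest_dir`.

    Hypothetical helper: the output name is simply the source name plus ".gpg".
    """
    target = dest_dir / (source.name + ".gpg")
    return [
        "gpg",
        "--symmetric",              # passphrase-based, no key pair required
        "--cipher-algo", "AES256",  # ask for AES-256 explicitly
        "--output", str(target),
        str(source),
    ]


def encrypt_into_dropbox(source: Path, dest_dir: Path) -> Path:
    """Encrypt `source` with gpg and write only the ciphertext into `dest_dir`."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    command = build_gpg_command(source, dest_dir)
    # gpg asks for the passphrase itself; check=True raises if gpg fails.
    subprocess.run(command, check=True)
    return Path(command[command.index("--output") + 1])
```

Calling something like `encrypt_into_dropbox(Path("photos-2013.tar"), Path.home() / "Dropbox" / "archive")` (both paths are just examples) would leave `photos-2013.tar.gpg` in the synced folder while the plaintext stays outside it. The obvious trade-off is the one mentioned earlier: lose the passphrase and the archive is unrecoverable gibberish.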

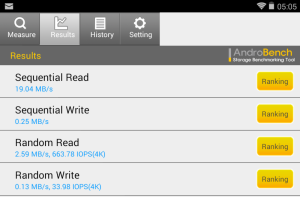

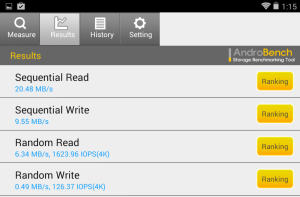

However, if you can avoid it, it seems safest to not let your devices drop below ~3.5GB free (at least on a 16GB device).
